Generative AI overview

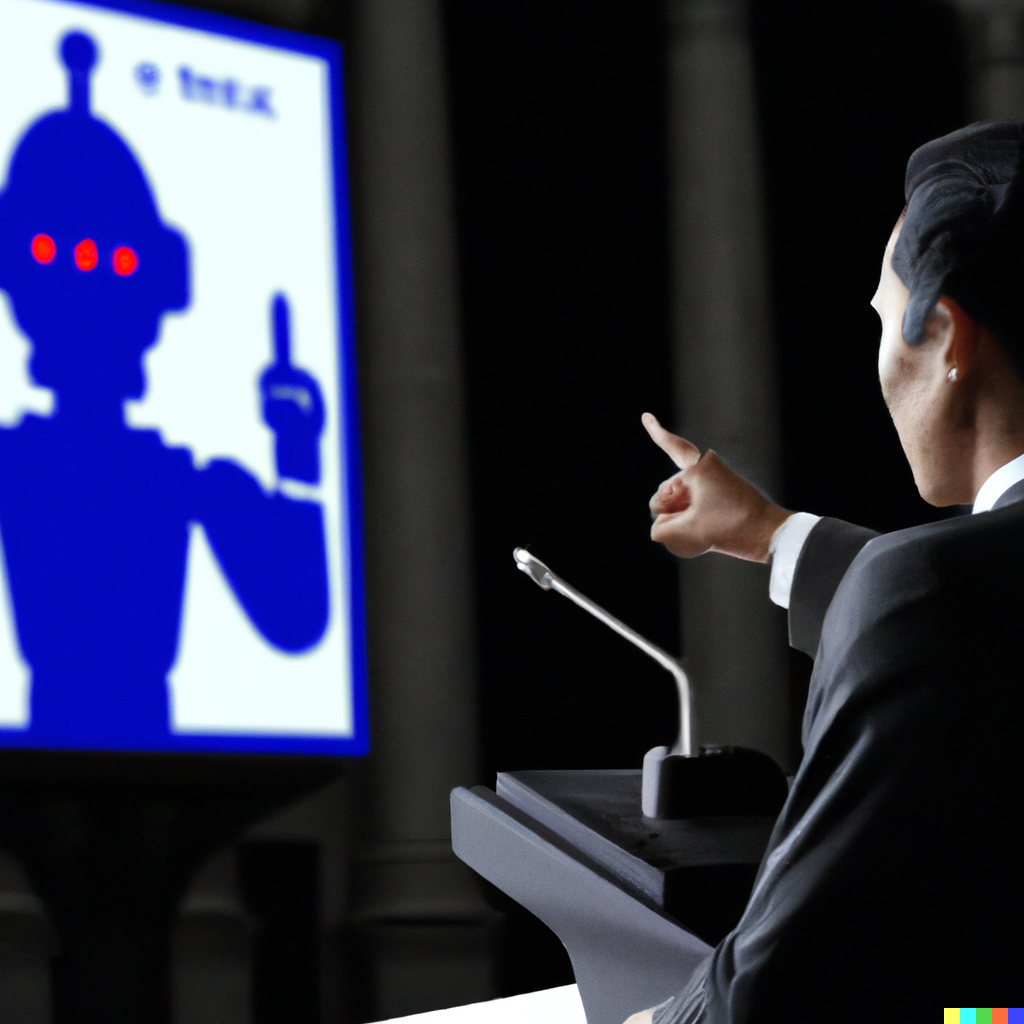

Dall-E 2 input: “A politician interacting with artificial intelligence” (output image shared above).

This overview has been written by applying human intellect, research and use of generative AI (for research purposes) to highlight some of the key messages. This is not an exhaustive artefact but provides sufficient context to warrant further investigation into this subject. WeBTG welcomes the opportunity for further dialogue to address this topic in a comprehensive manner.

What is Generative AI?

Artificial Intelligence (AI) creates tremendous opportunities, with implications for employment, innovation, and economic and competitive advantage. The risks of adverse implications are equally real: loss of existing jobs to automation, violation of privacy, breach of intellectual property rights and data security breaches, to name a few.

Readily available and more affordable compute power along with exponential growth in datasets has led to great advances in AI. The breadth of AI is wide and varied, and covers different technologies like Chatbots and Digital assistants, Machine Learning, Natural Language Processing, Neural Networks and Robotics.

Generative AI has become a prominent branch of AI thanks to vendor offerings like ChatGPT gaining global popularity at a phenomenal pace since late 2022. In simple terms, as its name suggests, this type of AI technology helps users generate new content – be it text, images, video, code or even music – without requiring any specific technical knowledge or skill on the part of the user. These solutions work by training underlying models, such as Large Language Models (LLMs), on large datasets so that meaningful content can be generated as output. As it stands today, the output cannot be guaranteed to be 100% accurate. To mitigate this, an organisation looking to use generative AI can, for instance, use these LLMs alongside the content of its own website to provide more accurate responses via a chatbot interface.
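As a rough illustration of the idea of pairing an LLM with an organisation’s own website content, the sketch below picks the most relevant page for a question and builds a prompt that tells the model to answer only from that page. Everything in it is an assumption made for illustration: the `PAGES` snippets, the function names and the simple word-overlap matching are hypothetical, and the call to an actual LLM is deliberately left out.

```python
import re

# Hypothetical snippets standing in for pages of an organisation's website.
PAGES = {
    "opening-hours": "Our customer service centre is open Monday to Friday from 9am to 5pm.",
    "waste-collection": "General waste bins are collected weekly and recycling bins fortnightly.",
    "contact": "You can contact us by phone, email or the online enquiry form.",
}


def tokenize(text):
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, pages):
    """Return the (page_id, text) pair sharing the most words with the question."""
    question_words = tokenize(question)
    return max(pages.items(), key=lambda item: len(question_words & tokenize(item[1])))


def build_prompt(question, pages):
    """Combine the best-matching page with the question into a grounded prompt."""
    page_id, text = retrieve(question, pages)
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you do not know.\n"
        f"Context ({page_id}): {text}\n"
        f"Question: {question}"
    )


# The resulting prompt would then be sent to whichever LLM the organisation uses.
prompt = build_prompt("How often are recycling bins collected?", PAGES)
print(prompt)
```

Real deployments typically replace the word-overlap step with a proper search index, but the principle is the same: the model is asked to answer from the organisation’s own content rather than from its training data alone.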

What can you do with generative AI?

Generative AI can be used to generate:

- text output e.g. ChatGPT is a popular chatbot interface that generates text of varying quality and length based on the query entered by the user. From generic or simplistic answers to essays and summarised insights, these generators provide output in a matter of seconds.

- image output e.g. Midjourney, DreamStudio and Dall-E 2 – similar to the text generators, these solutions create images based on a description provided in the user interface. The quality of output is somewhat subjective and some challenges persist today e.g. rendering fingers and hands is not yet a mastered capability. A case in point is the hand in the output image above, compared to the rest of the picture.

- video output e.g. DeepBrain AI provides a script-to-video generator, and the examples shared here demonstrate how capable this technology is.

- sound output e.g. Sonantic (now acquired by Spotify) generated Val Kilmer’s voice for the movie Top Gun: Maverick. In another experiment, using self-composed lyrics of inferior quality and sampling based on Kanye West’s singing voice, Roberto Nickson was able to create a song that sounds like the artist himself.

- speech-to-text output e.g. Whisper (also from OpenAI) provides speech recognition capabilities and could be used to transcribe speech in multiple languages and/or translate it into English.

Use-cases are vast and varied – the extent to which these become real or mainstream remains to be seen. A few examples are shared here for context.

Marketers can synthetically generate outbound messages, enhancing potential for upselling and cross-selling. Graphic designers can generate images for commercial use in advertising, media, marketing, educational and promotional materials and so forth.

Customer service interactions through chatbots can improve and be delivered at a lower cost, with a higher quality of service offered via AI-based engagement. Online chatbots can now be enhanced with avatars that act as a virtual assistant for your brand or website.

Government agencies, including local government, could use generative AI for policy development, for summarising large amounts of data and for providing enhanced chatbot interfaces for better service provision to citizens and the wider community. Based on current capabilities of generative AI, a human should always be in the loop when the technology is used to augment service delivery capacity and capabilities.

Modelling agencies & fashion houses can now use generative AI to create the next catwalk model – yes, you read that right. The essence of a fashion show is not live human interaction & consumption per se but rather scaling up for the masses via photography and videography. Now, seemingly any type of fashion model can be generated using text-to-image generative AI, for instance. This also extends to mobile apps like Lensa that turn selfies into various types of avatars. From Lensa: “Improve facial retouching with a single tap of Magic Correction” (Lensa uses Stable Diffusion, an open-source AI deep learning model from Stability AI, which draws from a database of art scraped from the internet – more on that later).

The adoption of generative AI technology is the fastest known in human history – various sources suggest ChatGPT gained over 100m users within about two months of launch. In comparison, it took TikTok about 9 months, Instagram 2.5 years and Twitter about 5 years to reach the same milestone. Generative AI is coming at you faster than you may know it!

In line with this explosion, a large number of vendors are reacting in kind in a “me too” mode: any vendor without an AI strategy as a focus item in 2023 is, or seemingly should be, scrambling to get one. Here is a sample of recent announcements in this space. As an initial approach, some of these technologies have been released in beta and/or in particular geographies only.

OpenAI, provider of the popular ChatGPT and Dall-E generators, extended its partnership with Microsoft earlier this year. Expect to see generative AI capability in your everyday use of Office and other tools: the recent Copilot announcement provides more details of how Microsoft seeks to embed OpenAI capabilities into its offerings.

Likewise, Google has announced competing capabilities for its Google Workspace solutions and provides other generative AI capabilities as well (e.g. Bard is a chatbot that serves generated responses from the internet much like ChatGPT, and differs from a search engine, which presents outputs in the form of websites and links).

Nvidia & Adobe have partnered to build new generative AI workflows into offerings like Adobe Photoshop and Nvidia’s Picasso cloud service.

Stability AI develops open AI models for image, language, audio, video, 3D, and biology. It is the developer of Stable Diffusion, a powerful, free and open-source text-to-image generator. It has also partnered with AWS to power its AI technology.

And the list goes on.

What are the positive and negative implications of using generative AI technologies?

1) Productivity – at a very basic level, generative AI can improve productivity in many ways: searching for answers quickly, getting emails drafted, or even getting larger bodies of text like essays written for you, all by simply entering a text narrative into the user interface and receiving the output in a matter of seconds. It is this immediacy of results that makes generative AI a productivity game changer.

2) Job displacement – at its extreme, this could impact labour markets at an unprecedented scale; a recent report from Goldman Sachs suggests 300m jobs could be affected globally. With the pace of adoption and the choices on offer, it is only a matter of time before many organisations start using these technologies. Depending on how these technologies get embedded, this undoubtedly has implications for jobs across different sectors, either as a tool to augment staff capability and capacity or, in some cases, as an outright replacement for staff. A few examples include:

Media organisations reducing or replacing swathes of production and anchoring crews because text-to-video capabilities allow them to create curated video content quickly and at scale. This is an alternative and potentially cheaper option, but time will tell whether audiences readily accept or reject such output.

The film industry creating virtual movies that reduce employment demand for actors, screen and script writers, production crews and so forth. Again, a new genre of movie production could be on offer, but will the content & quality appeal to or repel movie critics and paying customers?

Education providers potentially replacing human teachers with online avatars presenting virtual content instead. Teaching could be scaled economically, but does this create a value-for-money offering for students keen to socialise and learn from in-person experiences with their peers? On the evidence of the pandemic era, when online delivery was the only viable option under lockdown constraints, many students were left jaded and questioned the value of the education experience they received.

Fashion houses requiring fewer human models, or modelling agencies for that matter, not to mention photographers and other roles typically involved in the way fashion shows are run today. Some concerns have been raised in this regard already.

Analytical jobs in sectors such as research, consulting and legal being reduced, with generative AI content replacing tasks that a human has traditionally carried out, at least at the basic level of gathering and analysing numerous data points across a wide number of datasets.

Whilst governments and education providers at large have in the past few years been pushing for increased exposure to technical skills like coding in all tiers of education, generative AI can now write code. Could this imply the world needs fewer software developers? Perhaps.

Vendors like OpenAI are also somewhat modest in their own research on how solutions like ChatGPT can affect jobs, though this could be perceived as a “biased point of view”, since it plays down the negative impact of job displacement as the technology attains greater adoption.

The actual impact could well be a more moderated version of the above depiction. Across the past few decades, from historical examples like the internet and computers impacting typewriters and printing services, to the future where new technologies and related industries emerge to solve for climate change issues, humans continue to innovate and evolve. And so will some of the existing job types.

3) Potential breach of IP & liability – generative AI requires AI models to be trained on existing datasets before they can generate meaningful output. How the developers of these technologies go about training the models is somewhat of a grey area: can they guarantee that data scraped from internet sources for training purposes does not breach any copyright or IP protections in the relevant jurisdictions? Stability AI, the provider of the popular DreamStudio text-to-image generator, has had a case filed against it by Getty Images for the alleged use of Getty images to train its AI models. The broader legal ramifications of generative AI are discussed further here.

4) Data Privacy – whilst Australia does not have any specific legislation regarding AI and related technologies like Big Data and automated decision making, existing laws and possible cross-border considerations should be taken into account nonetheless.

The role of datasets used for training AI models, the use of generative AI to provide meaningful output, and the use of this technology by end customers & citizens alike all require further investigation into how organisations handle data privacy. For instance, an end customer exercising their “right to be forgotten” may not find this easily achievable when generative AI is involved.

As offerings evolve into products beyond current beta solutions, the roles of everyone interacting with a generative AI technology have to be examined further: the AI developer, the vendors (especially those embedding a 3rd party AI solution in their own offering e.g. Microsoft’s use of OpenAI solutions), the organisation’s staff and the end customers/citizens. Setting up governance controls and enforcement, as well as handling privacy concerns, could be challenging to implement.

5) Security – the breadth of exposure here is wide. Foreign hackers can now refine their phishing attempts, as generative AI can provide them with more persuasive English-language content to target unsuspecting victims. It also gives them the ability to generate code with malicious intent. Generative AI also enables the creation of deepfakes and identity spoofing, so ascertaining what is real versus generated could become a growing concern in the everyday workflows we interact with e.g. facial recognition and its use in identity management. Whilst OpenAI and several other vendors are continuously building guardrails into their platforms to prevent these technologies being used to create harmful content, there is no guarantee that harmful output or intent will not slip through the filters, so to speak. There are some guidelines, as provided by NIST for instance, but these need to be applied in a self-regulated manner at an individual organisational level.

6) Accuracy of generated content and context – generative AI, whilst appearing on first impressions to be very capable for a lot of use cases, can still produce incorrect content and/or provide incoherent context if not trained and used appropriately. After all, the underlying LLMs are only as good as the datasets used to train them, and training LLMs is by no means an easy task. Terms like hallucinations and bias come up often, especially when the AI models do not produce the expected outcomes.

How should organisations approach the advent of this new technology?

Advances in generative AI should be celebrated as a marvel of human creativity. Ironically, this very innovation targets the one key differentiator between humans and machines: the art of being creative. With machine-generated outputs overlapping into the domain of human creativity, the impact on our society and the accompanying protective measures that are warranted are worth exploring further.

All organisations, be they commercial enterprises or government agencies, should establish an ethical policy framework around the responsible use of both generative AI technologies and the numerous public and private datasets they rely on. Generative AI raises many questions that could catch organisations by surprise, with ramifications, both positive and negative, in the legal, social, political and data privacy & security realms. Every organisation therefore needs to investigate the use of generative AI in a considered manner. This is not solely the responsibility of the organisation’s IT department, and it is not just a technology conversation. Both top-down and bottom-up leadership and dialogue are required to progress on this front. One thing is certain – the world as we know it is changing rapidly with the evolution of generative AI, and this warrants immediate action.

PS: all references used in this write-up have themselves been written in the past month or so – the breadth and speed of the evolution of generative AI is unreal.